
Superhuman AI: Hype or Reality? The Growing Divide Between Tech Leaders and Researchers

The race toward superhuman AI, often framed as artificial general intelligence (AGI), is heating up, with tech executives like OpenAI’s Sam Altman and Anthropic’s Dario Amodei predicting machines could surpass human intelligence by 2026 or sooner. Yet many AI researchers dismiss these claims as corporate hype, arguing that today’s AI systems are far from achieving true general intelligence.

The AGI Debate: Optimism vs. Reality

AGI—Artificial General Intelligence—refers to AI that can outperform humans in nearly every cognitive task. While industry leaders suggest AGI is just around the corner, academics remain doubtful.

- Sam Altman (OpenAI): Claims AGI is “coming into view.”

- Dario Amodei (Anthropic): Predicts AGI could arrive by 2026.

- Yann LeCun (Meta): Argues scaling up current AI models won’t lead to human-level intelligence.

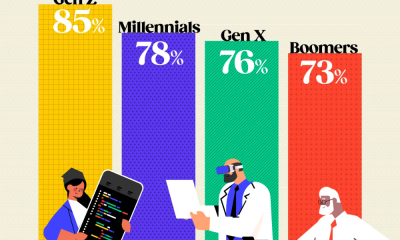

A recent survey by the Association for the Advancement of Artificial Intelligence (AAAI) found that over 75% of researchers believe today’s AI techniques won’t produce AGI. So, why the disconnect?

Corporate Hype or Genuine Breakthrough?

Critics argue that AI companies have financial incentives to fuel AGI excitement.

“They’ve made big investments, and they have to pay off,” says Kristian Kersting, an AI researcher at TU Darmstadt. “By framing AGI as both revolutionary and dangerous, they position themselves as the only ones who can control it.”

Some compare the rhetoric to Goethe’s “The Sorcerer’s Apprentice”—a story about losing control of a powerful force. Others reference the “paperclip maximizer” thought experiment, where an AI could unintentionally destroy humanity in pursuit of a trivial goal.

Near-Term Risks vs. Sci-Fi Fears

While debates rage over distant AGI threats, experts warn that existing AI poses real dangers today:

- Algorithmic bias in hiring, lending, and law enforcement

- Misinformation from AI-generated content

- Job displacement due to automation

Seán Ó hÉigeartaigh (University of Cambridge) suggests that even if AGI is decades away, we should prepare now:

“If there were a 10% chance aliens would arrive by 2030, we’d plan for it. AGI could be the biggest thing ever—we can’t ignore it.”

The Challenge: Making AI Risks Believable

One problem? Superintelligent AI sounds like science fiction, so policymakers and the public struggle to take it seriously. Yet as AI advances, the line between hype and reality grows thinner.

The Bottom Line:

While tech leaders push AGI as the next revolution, researchers urge caution. The real question isn’t just when AGI arrives—but whether it’s even possible with today’s tech.

What do you think—is superhuman AI imminent, or just another Silicon Valley sales pitch?

Source: IOL


For more news in Johannesburg, visit joburgetc.com